In a development that could signal a major shift in computing, Google’s Quantum AI division has unveiled what it calls “quantum echoes,” demonstrated on its latest hardware platform, the Willow chip. The company says the work points toward next-generation innovations in artificial intelligence, materials science, and molecular engineering.

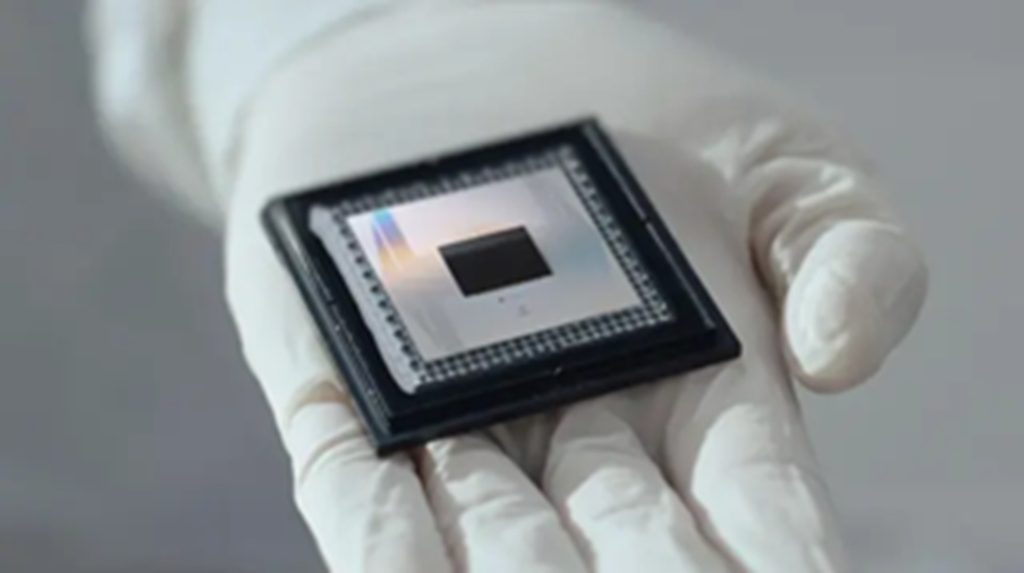

The Willow chip, a 105-qubit superconducting quantum processor, is Google’s most advanced effort yet to push the boundaries of quantum performance and stability. The company reports that the chip has reached significant performance and error-correction milestones, including a benchmark computation completed in under five minutes that Google estimates would take one of today’s fastest supercomputers about 10 septillion years. The term “quantum echoes” refers to a new algorithm of the same name, which runs a sequence of operations on the qubits, perturbs a single qubit, and then runs the sequence in reverse. The signal that returns, amplified by constructive interference in the qubits’ quantum states, is the “echo,” and Google presents it as a step toward practical quantum computing.
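
The echo idea can be made concrete with a toy model. Echo measurements of this kind are commonly formalized as an out-of-time-order correlator (OTOC): apply a probe operator, run a circuit forward, perturb one “butterfly” qubit, run the circuit backward, and compare the result with the starting state. The pure-Python sketch below computes an OTOC for three simulated qubits. The circuits, operator choices, and function names are hypothetical illustrations, not Google’s implementation or hardware.

```python
import math

# Hypothetical toy model for illustration only; not Google's Quantum Echoes code.
# Pure-Python state-vector simulator: qubit q is bit q of the basis index.


def apply_x(state, q):
    """Pauli X on qubit q: swaps amplitudes whose indices differ in bit q."""
    out = state[:]
    for i, amp in enumerate(state):
        out[i ^ (1 << q)] = amp
    return out


def apply_z(state, q):
    """Pauli Z on qubit q: flips the sign wherever bit q is 1."""
    return [-amp if (i >> q) & 1 else amp for i, amp in enumerate(state)]


def apply_cnot(state, control, target):
    """CNOT: flips the target bit on basis states whose control bit is 1."""
    out = state[:]
    for i, amp in enumerate(state):
        if (i >> control) & 1:
            out[i ^ (1 << target)] = amp
    return out


def apply_ry(state, q, theta):
    """Single-qubit Y rotation RY(theta) on qubit q."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    out = state[:]
    for i in range(len(state)):
        if not (i >> q) & 1:
            j = i | (1 << q)
            a0, a1 = state[i], state[j]
            out[i] = c * a0 - s * a1
            out[j] = s * a0 + c * a1
    return out


def apply_gate(state, gate, inverse=False):
    """Gates are tuples: ("cnot", control, target) or ("ry", qubit, theta)."""
    if gate[0] == "cnot":  # CNOT is its own inverse
        return apply_cnot(state, gate[1], gate[2])
    if gate[0] == "ry":  # RY(theta)^dagger == RY(-theta)
        return apply_ry(state, gate[1], -gate[2] if inverse else gate[2])
    raise ValueError(f"unknown gate {gate[0]!r}")


def forward(state, circuit):
    """Apply U: the gates in time order."""
    for gate in circuit:
        state = apply_gate(state, gate)
    return state


def backward(state, circuit):
    """Apply U^dagger: inverse gates in reverse order (the time reversal)."""
    for gate in reversed(circuit):
        state = apply_gate(state, gate, inverse=True)
    return state


def otoc(circuit, n_qubits, butterfly, probe):
    """F = <psi| B(t) M B(t) M |psi>, with B = X on `butterfly`,
    M = Z on `probe`, B(t) = U^dagger B U and |psi> = |+>^n."""
    dim = 2 ** n_qubits
    psi = [1 / math.sqrt(dim)] * dim
    phi = psi
    for _ in range(2):
        phi = apply_z(phi, probe)      # probe operator M
        phi = forward(phi, circuit)    # run the circuit forward
        phi = apply_x(phi, butterfly)  # perturb a single qubit
        phi = backward(phi, circuit)   # run the circuit in reverse
    return sum(a.conjugate() * b for a, b in zip(psi, phi))


if __name__ == "__main__":
    print(otoc([], 3, butterfly=2, probe=0))                # no spreading
    print(otoc([("cnot", 2, 0)], 3, butterfly=2, probe=0))  # full spreading
    print(otoc([("cnot", 2, 0), ("ry", 2, 0.7)], 3, butterfly=2, probe=0))
```

Run as a script, the first call gives 1 (up to floating-point rounding) because the perturbation never reaches the probe qubit; the second gives −1 because the CNOT carries the perturbation onto qubit 0; the third, which adds a non-Clifford rotation, lands strictly between the two. How such values drift away from 1 as circuits grow is the kind of signal echo experiments are designed to probe.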

According to Google’s researchers, the quantum echoes results are verifiable: they can be reproduced on Willow or on another quantum computer of similar quality, a key requirement for any usable quantum system. Their tests indicate that the algorithm running on Willow completes certain computations about 13,000 times faster than the best classical algorithm running on one of the world’s fastest supercomputers. Google’s published results also show that enlarging the qubit arrays used for error correction, together with improved error-correction techniques, reduces logical error rates exponentially, paving the way for scalable, fault-tolerant quantum systems.

A particularly noteworthy milestone achieved on Willow is the demonstration of “below-threshold” quantum error correction, meaning that as more physical qubits are devoted to each logical qubit, the logical error rate goes down rather than up. Google scaled its surface-code lattices from 3×3 to 5×5 to 7×7 grids of data qubits and reported that the logical error rate was cut roughly in half at each step, one of the clearest demonstrations yet of progress toward practical fault-tolerant quantum computing.
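
The “below-threshold” claim can be made concrete with the scaling model commonly used for surface codes. If Λ is the factor by which the logical error rate drops each time the code distance d grows by two (3×3 → 5×5 → 7×7), then ε_d ≈ ε_3 / Λ^((d − 3)/2), and the system is below threshold exactly when Λ > 1. The sketch below assumes this simplified model; Λ = 2 roughly matches Google’s public description of the error rate halving at each step, while the starting rate ε_3 = 0.01 and the function name are hypothetical.

```python
# Simplified model of below-threshold error suppression (an assumption for
# illustration): each step up in surface-code distance (d -> d + 2) divides
# the logical error rate by a suppression factor lam. lam > 1 is "below threshold".


def logical_error_rate(eps_d3: float, lam: float, d: int) -> float:
    """Projected logical error rate at odd code distance d >= 3, given a
    (hypothetical) rate eps_d3 at distance 3 and suppression factor lam."""
    if d < 3 or d % 2 == 0:
        raise ValueError("code distance must be an odd integer >= 3")
    steps = (d - 3) // 2  # number of d -> d + 2 increments starting from d = 3
    return eps_d3 / lam ** steps


if __name__ == "__main__":
    eps_d3 = 1e-2  # hypothetical starting value, not a Willow measurement
    for lam in (2.0, 0.8):  # 2.0: below threshold; 0.8: above threshold
        rates = {d: logical_error_rate(eps_d3, lam, d) for d in (3, 5, 7)}
        print(f"lambda={lam}: {rates}")
```

With Λ = 2 the projected rate halves at each step (0.01, 0.005, 0.0025); with Λ = 0.8 it grows instead, which is what “above threshold” means in practice: adding qubits makes the logical qubit worse, not better.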

The potential applications are broad. Google foresees near-term uses in quantum simulation of molecular systems, discovery of new materials, next-generation battery chemistry, drug design, and hybrid quantum-classical AI systems. The “quantum echoes” algorithm in particular could generate richer data sets for training machine learning models and provide insights into molecular structures that are beyond the reach of traditional supercomputers.

However, experts caution that the technology is still at an early stage. Willow appears to have crossed the threshold of “quantum supremacy,” performing a task no classical computer could feasibly match, but it has not yet achieved a practical “quantum advantage,” the point at which quantum processors solve real-world problems more efficiently than classical systems. Because each error-corrected logical qubit consumes many physical qubits (a distance-7 surface-code qubit in the standard layout uses 97), Willow’s 105 physical qubits support only about one logical qubit at that level of protection, and large-scale commercial applications remain several years away.
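
The gap between physical and logical qubit counts comes from error-correction overhead. A back-of-the-envelope sketch, assuming the standard rotated surface-code layout in which a distance-d logical qubit uses d² data qubits plus d² − 1 measurement qubits (2d² − 1 in total), and ignoring the extra qubits real devices reserve for routing and other overheads; the function names are hypothetical:

```python
# Rough qubit budget for error-corrected logical qubits. Assumes the standard
# rotated surface-code layout and ignores routing, leakage-removal and
# lattice-surgery overheads, so the results are optimistic upper bounds.


def physical_qubits_per_logical(d: int) -> int:
    """d*d data qubits plus d*d - 1 measurement qubits = 2*d*d - 1."""
    return d * d + (d * d - 1)


def max_logical_qubits(physical_qubits: int, d: int) -> int:
    """How many distance-d surface-code patches fit in a qubit budget."""
    return physical_qubits // physical_qubits_per_logical(d)


if __name__ == "__main__":
    for d in (3, 5, 7):
        print(
            f"d={d}: {physical_qubits_per_logical(d)} physical qubits per "
            f"logical qubit; at most {max_logical_qubits(105, d)} on a 105-qubit chip"
        )
```

Under these assumptions a 105-qubit chip holds at most six distance-3 logical qubits, two at distance 5, and only one at distance 7, which is why logical-qubit counts lag so far behind physical ones.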

Google has also addressed concerns about cryptographic vulnerability, emphasizing that Willow cannot break modern encryption standards such as RSA. Published resource estimates for breaking 2048-bit RSA call for on the order of a million or more noisy physical qubits running error-corrected algorithms, thousands of times more than Willow’s 105 and far beyond current hardware limits.

Despite these caveats, industry analysts view Willow as a turning point. The achievement underscores the accelerating pace of quantum innovation and signals the need for organizations to prepare for a hybrid computing future that combines quantum and classical architectures. Businesses are being urged to explore post-quantum cryptography and develop early expertise in quantum algorithms and workflows.

In summary, Google’s Willow chip experiments and its quantum echoes results represent a major step forward in the global race toward practical quantum computing. While universal, error-corrected quantum processors remain on the horizon, Willow stands as one of the most concrete demonstrations yet of scalable quantum control and verifiable quantum performance, key enablers of the next generation of AI, scientific discovery, and computational breakthroughs.
